A while ago, I was introduced more closely to a range of leading survey-based listening platforms such as CultureAmp, Qualtrics, Enalyzer, QuestionPro, Effectory, Survalyzer, Perceptyx, and a bunch of their friends, mostly seeing them through the lens of measuring employee engagement.

I was very impressed by their capabilities and by how easy they make it to set up surveys and to send, collect and compare the data. CultureAmp, for example, has a massive amount of data and benchmarks to explain how your scores compare to other companies in your industry. Qualtrics expanded its capabilities to analyse open text through its 2021 acquisition of Clarabridge. Lattice enables nudges, as does Perceptyx via its recent acquisition of Humu. By the way, this comes with the admission that actionability had been lacking for over a decade.

This blog’s focus is not to home in too much on the differences between surveys and the CircleLytics Dialogue, other than sharing and explaining the following.

A two round/three-step dialogue approach vs a one-step survey

Dialogue is a structured three-step approach, whereas surveys are designed as a one-step approach. Via surveys, (mainly closed-ended) questions are sent, answers are collected, and graphs, natural-language-processing-based textual analysis and reports are produced.

During the second step of a CircleLytics Dialogue, however, employees are more deeply engaged: they read and learn from coworkers’ diverse answers to your open questions. They rank these answers and, as a third step, enrich them by saying what to do next, i.e. they give meaning and understanding to others’ answers. This way they seriously co-create the company’s next step and, basically, solve your question and experience being closer to decision-making (this BCG article dives into this). Your question can be about fostering trust, increasing retention, simplifying work, progressing on DEI, reducing costs, innovating the company’s offering, etc. Anything. People literally spell it out. It’s the question you ask that drives the answers you get. Managers love it, and employees love it. And that’s worth a lot. That’s the result of collective intelligence.

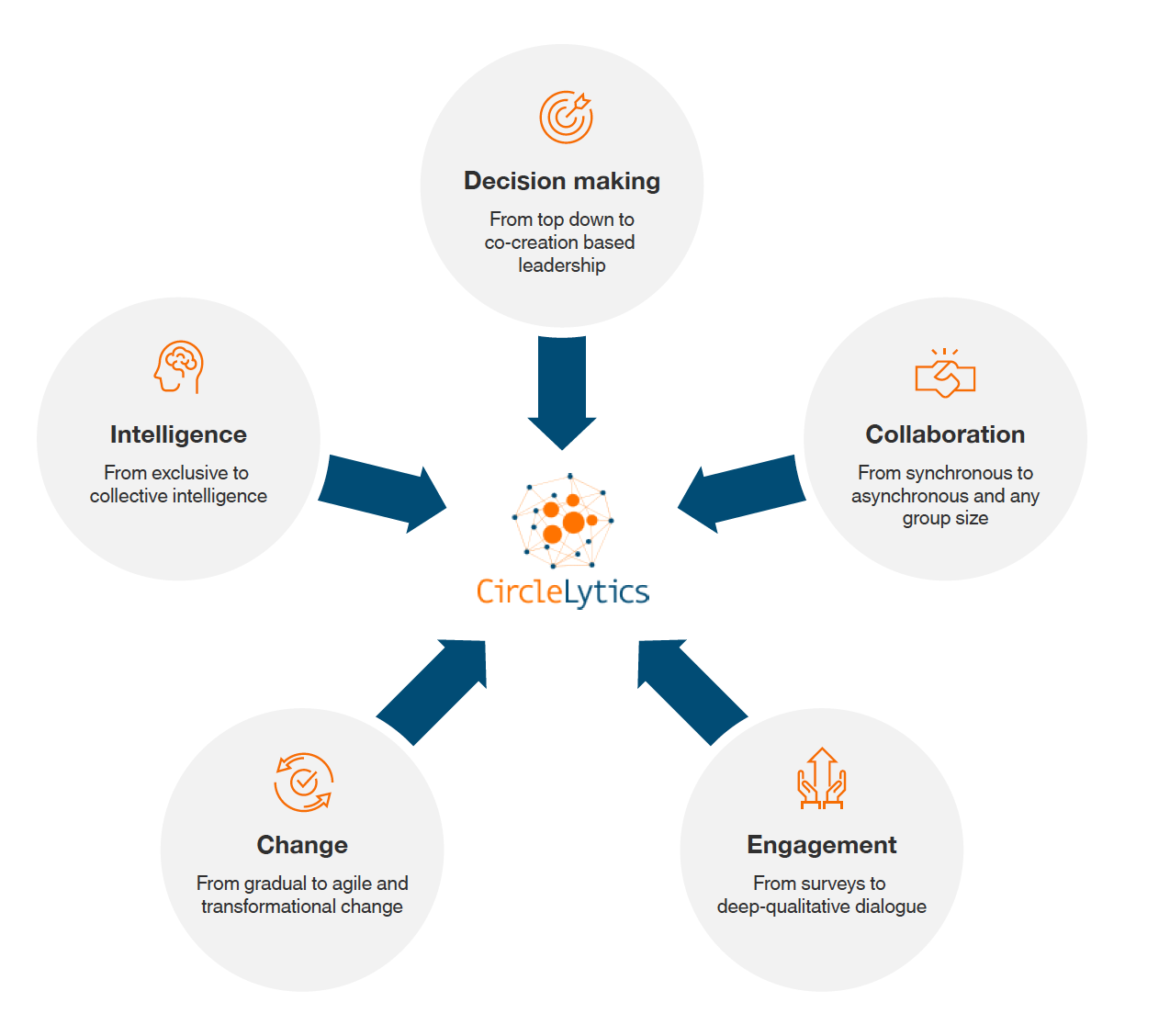

To us, listening nowadays is driven by the need for more bottom-up decision making, asynchronous collaboration, collective instead of small-group intelligence, higher engagement, and greater openness to (constant) change.

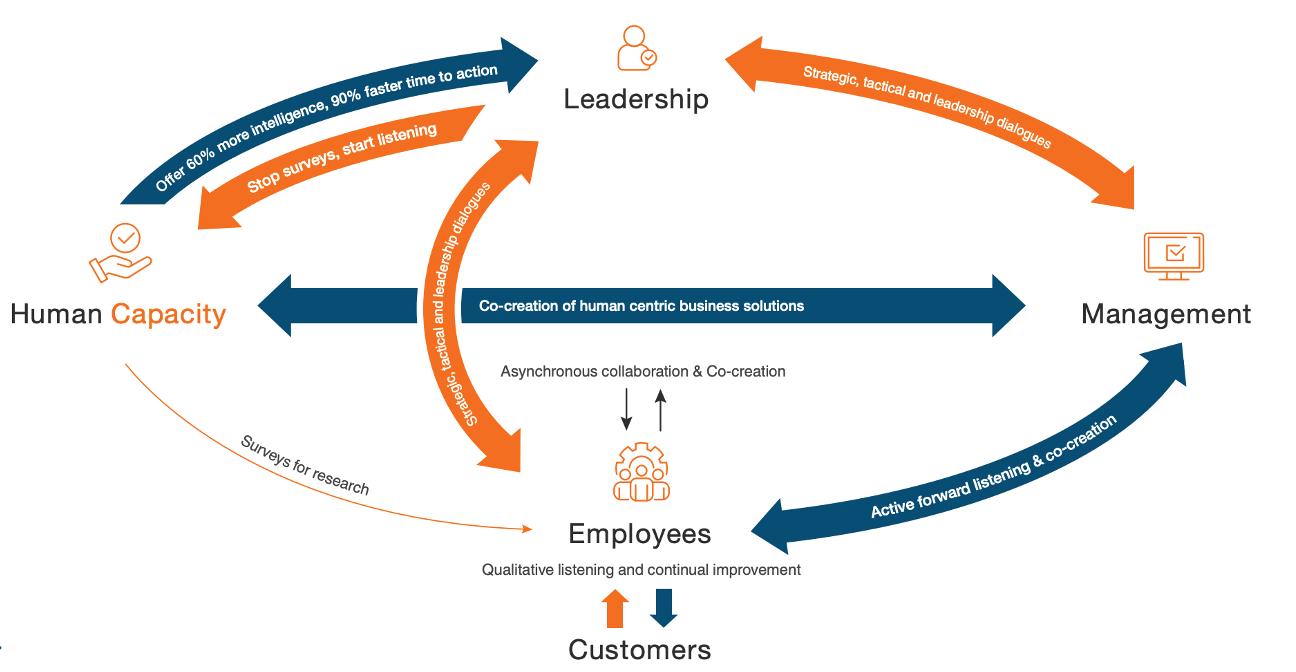

You can read more here about our view on people, on change and on leadership (we’re included in this IDC paper on a human-first approach to change). You will also read here about our view on how qualitative listening, through dialogue, fosters an engaged performance culture and elevated decision making. Qualitative listening via a two-step approach gets you a 60% more reliable result than surveys do about what’s really going on, why, where and, most important, what to do next. This reduces time to action by 90%. Furthermore, dialogues score high on employee experience, whereas surveys struggle with survey fatigue and, most of all, with fatigue caused by a lack of action. Dialogues score 4.3 out of 5, rated by hundreds of thousands of respondents by now.

Why are surveys still here?

Reasons for companies and HR leadership to still apply survey (single-step listening) technology may vary, but usually include things such as:

- internal reporting systems are technically interconnected with this platform

- benchmarking throughout the years with previous results

- availability of external benchmarking (sector, country)

- HR and others are used to this platform

- the listening platform is used for more than employee listening, e.g. CX

- the contract is still running.

And one reason we were recently told about by an insider in the HR Tech industry: “survey based technology masks that leaders don’t really want to listen to what’s going on to avoid addressing issues”. What we instantly added: well, then these leaders don’t want to listen to opportunities, ways to move the needle, or solutions to problems either… Listening to employees does not equal ‘listening to more problems’ nor ‘doing what they say’, but it does mean listening to solutions, to things that put your strategy at risk, to things that can be simpler or reduce costs, etc. It’s the power of the questions asked and the framing of the context that determine the outcome, as does stepping up your listening game by adding qualitative listening and multi-step dialogue to your listening portfolio.

We have never read or heard, nor found any academic research showing, that:

- employees simply love surveys, feel taken seriously and invest themselves deeply

- managers can instantly, seamlessly make decisions based on survey outcomes

- CFOs underwrite the high and explicit return on investment of money spent on surveys

- retention, trust, engagement, etc are significantly increased by survey-based listening.

Unfortunately, the return on investments in survey technology to engage, commit and retain employees remains unproven, except for incidental cases showcased on survey platforms’ websites.

We do believe that, upon introducing qualitative, forward listening that connects people with others and with true company challenges, companies can reposition periodical surveys by framing them as ‘doing research’, since that’s their primary focus. This might induce employees to tick off all the survey questions with even some pleasure, simply because they’re helping their employer do research, while they know that voicing their opinions is done elsewhere, in another way.

The history of surveys explains today’s way of working

Did you know that surveys, compared with interviews and open-ended questions, were already the lesser means of collecting good data some 80 years ago? Surveys gained traction only because of the speed and ease of processing closed answers to measure the population’s sentiment, as input for government policy making during war. Not for reasons of quality, not for reasons of depth. Nor were surveys ever meant to have any impact on the respondents themselves: not on their thinking, not to spark creativity, not to rethink and learn from each other. Doing research is the primary, and maybe only, focal point of surveys.

After the war, surveys gained further traction to “measure anything” and find new markets, as many companies had to do. War propaganda agencies turned to PR. Oil companies influenced the building of infrastructure to boost the automotive sector. Nowadays, people demand more than surveys, and fatigue has set in quite strongly. People want influence, co-creation, to be taken seriously, to learn from others, to collaborate better, and to move forward collectively to keep their job instead of losing it. And technology is finally available to convert one-way-street surveys into interactive, qualitative dialogues, creating a new category of listening to people and of building better companies with, through and because of employees and customers.

The old top-down model failed; here’s our take from now on

We believe (and witness at many customers) that bringing employees closer to what’s going on, and having them diagnose problems, predict market trends, design solutions, simplify complexities, etc., not only increases trust and engagement, but also brings companies and leadership closer to success.

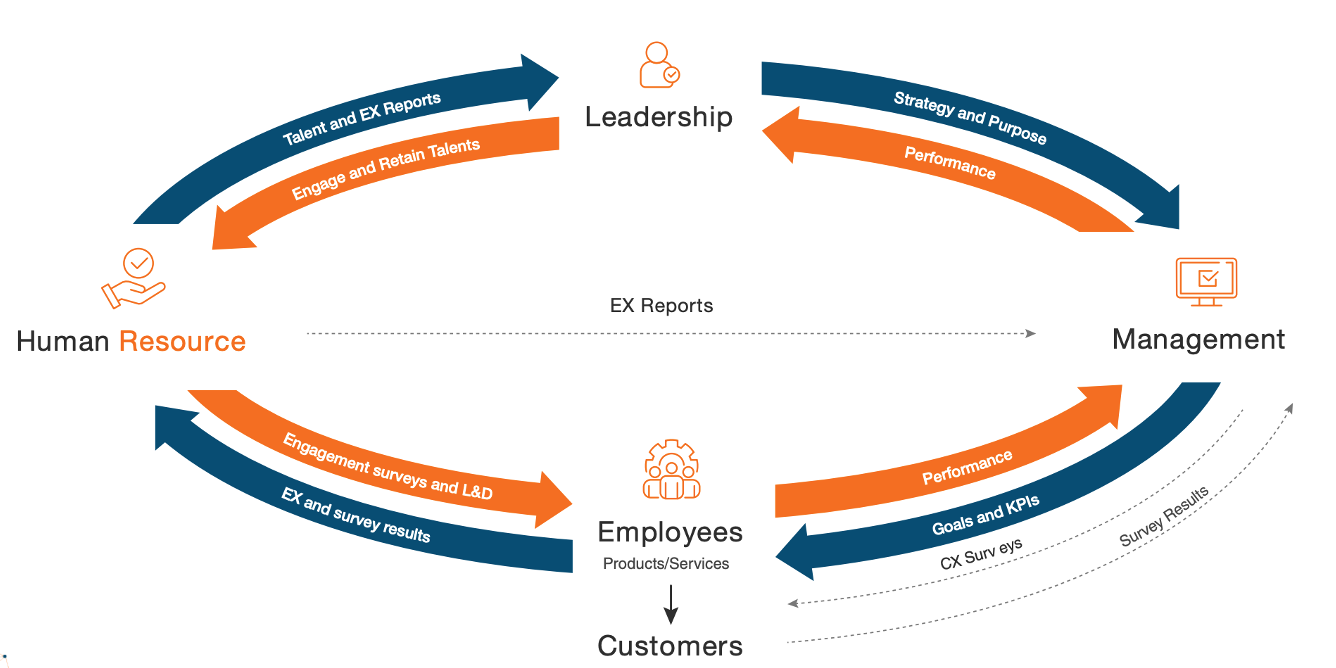

Take a look at this traditional ‘old’ model of leadership, listening and getting things done:

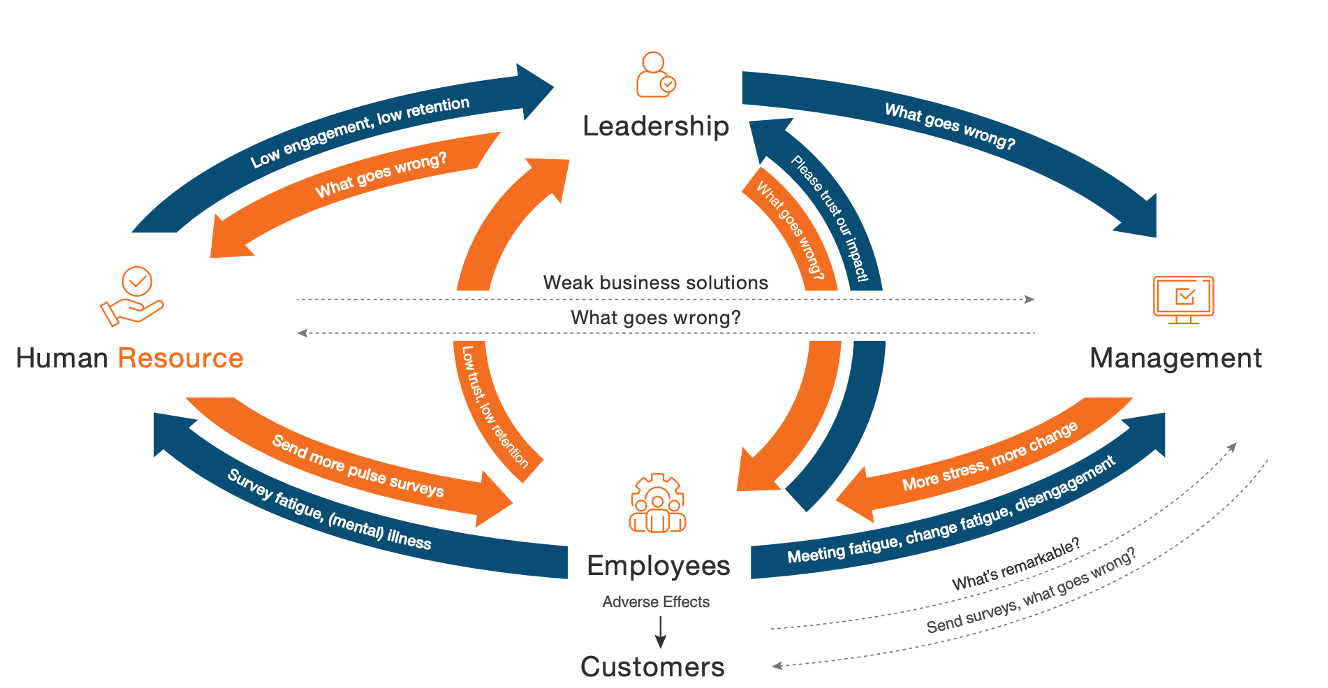

And what it brought us: disengagement, attrition, survey & meeting & change fatigue and a lack of trust.

Then take a look at this new model of leadership, co-creation and dialogue, which brings employees and customers closer to the company’s purpose, decision-making and daily improvements:

Given this new model, listening means, to us, active-forward listening. In this context, you will understand why we are redesigning the way companies’ leadership, management and HR listen to and co-create with people.

Two strategies to elevate your company’s listening

We will examine and explain how to do this via two different strategies.

- stop surveys and move listening forward: combine specific and generic closed, specific open, and specific closed/open ended questions in platforms such as CircleLytics Dialogue

- keep surveys for now, with generic closed-ended questions, yet combine them with dialogue. This can be done either via a separated approach, in which survey results are used to select and prepare separate dialogues, or via a (zero, semi or fully technically) integrated approach, in which survey results trigger a dialogue

Our collective intelligence and AI driven employee listening is, compared to surveys, a much-needed and timely step for HR, leadership and management to take, also considering Josh Bersin’s latest, quite shocking research. After all, these days it’s all about co-creating with employees and processing business- and people-critical insights faster than competitors, to obtain high-quality employee data tailored to solutions that enable managers and people to perform. To engage as a verb, more than measuring engagement as a noun. Collective intelligence emerges when respondents are enabled to interact with each other’s thinking, solutions and ideas. This aspect of collaborative and network-based learning is what differentiates CircleLytics Dialogue from literally any survey technology and has put us seriously on the map and on our customers’ and analysts’ radar.

Stop survey-based technology and shift to dialogue

Let’s examine the first of the two strategies mentioned above: stopping survey-based technology and moving your listening spending forward and elsewhere.

CircleLytics Dialogue offers a unique, intelligent QuestionDesignLab with 3,000+ open-ended or open/closed-ended questions, covering all business- and people-critical topics and themes. Any question can be deconstructed and reconstructed into a better one, more specific and tailored to your people, your business, your customers. After all: everything is specific, and in specificity lies the uniqueness of what you do and what sets you, your employees and your company apart from the rest.

For this reason, CircleLytics Dialogue does not believe in external benchmarking, and as a consequence we do not offer it. How can you add specific value for your people and company based on generic information about how other companies score? If trust scores 7.4 in your company, and 7.6 is the external benchmark, how can you compare these apples and oranges? Does the gap give you any clue at all? Or is 7.4 ‘high enough’? It is people- and business-critical to know what they say and feel about trust, and what they recommend to raise the bar at their company. You don’t need an external benchmark to take them seriously and select leading recommendations to improve trust. And people certainly don’t need to have their recommendations to improve trust ignored just because the generic question scored above some industry benchmark. That’s not about listening, that’s about ignoring…

So let’s zoom in on a question. Let’s pick this one, from CultureAmp’s much-promoted engagement survey.

“I have access to the things I need to do my job well.”

A few things we notice:

- this changes throughout the day, the week, depending on tasks and project phase

- this depends on my role, which might have changed a number of times

- this depends on my coworkers and manager(s), who also change over time

More important:

- it’s an important question to be asked by my manager, e.g. throughout the month

- it’s an important question to follow up on within, let’s say, days, since it impacts performance

- it’s a question to ask ‘in the moment’, about a present situation.

Remember, we’re biologically wired to forget things that happened. Not to remember. Asking people for input about things that happened weeks or months ago is just a recipe for outcomes you can’t rely on, let alone treat as reliable input for judging managers.

Our question design team suggests changing this question to:

“Do you currently have access to the things you need to do your job well, and can you explain or express your additional needs?”

And… have this question asked by managers regularly, not via HR’s generic and periodical survey. This way managers can show they care and know what actions to take. This reduces time to impact by 90%. Employees feel this action-focused listening, commit to answering these questions and vote up others’ answers. Everyone is set to go! And employees love this approach and rate it 4.3 out of 5.

Ask employees to score this question (the same as for the original CultureAmp question). Additionally, through the power of dialogue, enable them to vote coworkers’ textual answers up or down in the second step, and to add tips/recommendations for specific actions to take.

Employees get to:

- express their opinions (as we know, this directly impacts their engagement and trust)

- learn from coworkers how they see things differently and experience this connectedness

Managers get:

- answers that were supported (voted up) by the group, with their tips/recommendations

- opinions that were rejected by the group, and reasons why.

Everybody happy. Managers can implement things to improve, and avoid things that are rejected by the group (voted down).

To make HR happy as well, this improved question can be asked by HR, which then facilitates these types of action-oriented questions on behalf of all managers of all teams or departments. The scores and overviews in the dashboard still help HR to track scores over time, and the CircleLytics Dialogue dashboard creates meaningful reports and insights based on these vetted qualitative employee listening data. HR tailors to managers’ needs and enables them to engage their employees.

Look at this other (beautiful) question from CultureAmp’s set:

“My manager (or someone in management) has shown a genuine interest in my career aspirations.”

CultureAmp mentions you should be worried when scoring below benchmark (65-75% range). We say you should be worried when you score below the ambition that C-level has set with HR, and with you as the manager of that department or team. Given the talent shortage, I must say I don’t care how other companies treat the career development of their talent, as long as we as a company do it our way.

Our take on this question to run via CircleLytics Dialogue:

“Do you experience that I show a genuine interest in your career aspirations? Please score this question first, and then add your explanation or recommendation for me to learn from.”

The manager can add the following text in the second step via CircleLytics Dialogue:

“Here’s your invitation to read what coworkers think and feel about my interest in their career aspirations. Which answers do you support, which don’t you support? Any additional tips so I can change things for the better, or keep things that should be kept? Thanks in advance!”

The manager receives a Top 5 and Bottom 5 of answers to the question, including employees’ recommendations. There’s not even a need for managers to sit down with each of their employees, however many there are. Everyone was given an equal voice in this anonymous dialogue to help out with this very important question, and with the subsequent step to learn from, reflect on and vote up/down what others say. This phenomenon of collective intelligence (two-step dialogue) goes beyond individualistic intelligence (one-step survey), with 60% higher reliability and 90% faster time to action. The manager is instantly helped; employees learned from others and felt trusted and taken seriously. Ready for action.
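To make the Top 5 / Bottom 5 idea concrete, here is a minimal sketch in Python. It is an illustration only, not CircleLytics Dialogue’s actual ranking method: the `Answer` class, the `top_and_bottom` function and the “up-votes minus down-votes” scoring rule are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    text: str
    up_votes: int = 0
    down_votes: int = 0

    @property
    def net_score(self) -> int:
        # Assumed scoring rule: support minus rejection.
        return self.up_votes - self.down_votes


def top_and_bottom(answers: list[Answer], n: int = 5) -> tuple[list[Answer], list[Answer]]:
    """Return the n most supported and the n most rejected answers."""
    ranked = sorted(answers, key=lambda a: a.net_score, reverse=True)
    top = ranked[:n]
    # Take the tail of the ranking, most rejected first, without
    # repeating answers that already appear in the top list.
    bottom = ranked[max(n, len(ranked) - n):][::-1]
    return top, bottom


# Hypothetical answers to the career-aspirations question.
answers = [
    Answer("Schedule a career talk every quarter", up_votes=14, down_votes=1),
    Answer("Share internal vacancies earlier", up_votes=9, down_votes=2),
    Answer("Nothing needs to change", up_votes=2, down_votes=11),
    Answer("Career talks only during appraisals", up_votes=1, down_votes=6),
]

top, bottom = top_and_bottom(answers, n=2)
print("Supported:", [a.text for a in top])
print("Rejected:", [a.text for a in bottom])
```

In this sketch the supported list tells the manager what to implement and the rejected list what to avoid; a real platform may well weigh votes differently.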

This whole dialogue process secures employees’ privacy at all times. Everything is treated and processed anonymously. Why would you send engagement surveys that people can join anonymously, and then take privacy away from them when you want to deep-dive? After surveys, why would you require managers to solve the red flags from survey outcomes “with their team without any privacy for people”? To us, that does not make sense for a few reasons:

- if privacy is needed for the survey, then it is certainly needed when employees have to explain in a team meeting, facing their manager, why they take a negative stance on something. It doesn’t make sense to take away their privacy at this most vulnerable moment

- psychological safety is of the essence, and most leaders, HR and managers still have to tailor listening and other work processes to this much-needed theme

- dialogue and collecting diverse thoughts and perspectives is best served by anonymity. A good read is “On Dialogue” by David Bohm, or take a look at this video by Lorenzo Barberis, PhD, or the academic “Collective intelligence in humans: a literature overview”, by Salminen.

Do you know it takes companies on average 8 weeks or longer to follow up on survey outcomes? This while the essence of listening and feedback is to put it to work within days and follow up, not to put it aside. Do you know that, on average, no more than 1 out of 5 managers actually follows up on the results? For this reason, we recommend deconstructing employee survey-based listening and reconstructing it in the way we’re showing you right here.

In addition to CultureAmp, let’s examine a few questions by Qualtrics, i.e. from the EX25 list of questions, on which we notice:

“I feel energized at work.”

“I have trusting relationships at work.”

Here’s our redesign, but first of all we note that the question itself influences employees’ responses by stating ‘feel energized’ and ‘have trusting…’ instead of formulating these in a neutral way like this:

What can we learn about your recent level of energy at work, and can you explain this in your own words?

[on any closed scale from ‘low energy’ to ‘high energy’, plus a text field]

Or:

“I currently feel (yes/no) energized at work and my main reason for this is ….. ”

[on a scale from, for example, -3 representing no to +3 representing yes, plus a text field]
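As a rough illustration of such a combined closed/open question, the Python sketch below models one response as a score on the -3 to +3 scale from the example, paired with a free-text explanation. The `Response` class, the `average_score` helper and the rule that the text field may not be empty are assumptions made for this example, not a description of how any platform stores its data.

```python
from dataclasses import dataclass

SCALE_MIN, SCALE_MAX = -3, 3  # example scale: -3 represents no, +3 represents yes


@dataclass(frozen=True)
class Response:
    score: int
    explanation: str

    def __post_init__(self) -> None:
        if not SCALE_MIN <= self.score <= SCALE_MAX:
            raise ValueError(f"score must be between {SCALE_MIN} and {SCALE_MAX}")
        # Assumption for this sketch: the open-ended part is mandatory.
        if not self.explanation.strip():
            raise ValueError("an explanation in the respondent's own words is required")


def average_score(responses: list[Response]) -> float:
    """Average of the closed-scale scores, for tracking over time."""
    return sum(r.score for r in responses) / len(responses)


# Hypothetical responses to "I currently feel (yes/no) energized at work ..."
responses = [
    Response(2, "My current project gives me room to experiment."),
    Response(-1, "Too many meetings this week to get real work done."),
]
print(average_score(responses))
```

The score keeps results trackable, while the explanation is what coworkers read and vote on in the second step.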

Our design team at CircleLytics Dialogue strongly recommends always asking a deliberate open-ended question to extend and deepen your closed-ended question.

So, instead of “I have trusting relationships at work”, you’d better ask: “I have trusting relationships at work, at this moment, and here’s what it means to me.” And include the second step: ask employees if they recognize/support what others say, and ask for any tip they might have.

Again, people can’t look back for weeks, let alone for months (and even less so for a full year). To compare results between responses correctly, it makes sense to ask everyone about their recent experience. Your results will then be comparable, since the time frames are comparable. It doesn’t make sense to collect survey results without knowing what time frame employees are referring to when scoring your question low (or high).

What did we examine and learn till now about our consideration to stop survey based listening technology? Please contact us if you don’t follow or think differently.

- make questions specific instead of generic, except for maybe a few and frame these as research instead of listening and clarify the purpose of doing research

- have questions asked by managers or at least from their angle to humanize listening

- phrase questions in the ‘here and now’ or ‘future’; there’s where change and performance happen, not in the past: stop looking back unless you’re evaluating some project

- introduce a deliberate open-ended part in your question to spark people’s thinking

- add the second step to have open answers prioritized and enriched for fast actionability, and to benefit from the power of dialogue, hence learning from each other.

Are you interested in converting from survey-based (one-step) listening, moving up the listening maturity ladder, and introducing dialogue-based (two-step) listening to drive actionability? Just let us know, and trust us: it is easier than you imagine.